Adaptive Guidance

Accelerating Guided Diffusion

Adaptive Guidance (AG) is an efficient variant of Classifier-Free Guidance (CFG) that saves 25% of the total Number of Function Evaluations (NFEs) without compromising image quality.

Adaptive Guidance vs. Classifier-Free Guidance

For Adaptive Guidance, we keep the number of denoising iterations constant but reduce the number of steps that use CFG by increasing the threshold (top). For CFG, we simply reduce the total number of diffusion steps (bottom).
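The two ways of cutting cost can be compared by counting network evaluations. This is a minimal sketch, assuming each CFG step costs two evaluations (one conditional, one unconditional), each non-CFG step costs one, and CFG is applied to the earliest steps. The function names and the step counts are illustrative and not taken from the original work.

```python
def nfes_adaptive_guidance(total_steps: int, cfg_steps: int) -> int:
    """NFEs when only the first `cfg_steps` of `total_steps` use CFG."""
    if not 0 <= cfg_steps <= total_steps:
        raise ValueError("cfg_steps must lie in [0, total_steps]")
    # CFG steps cost 2 evaluations, the remaining steps cost 1.
    return 2 * cfg_steps + (total_steps - cfg_steps)


def nfes_cfg(total_steps: int) -> int:
    """NFEs when every step uses CFG."""
    return 2 * total_steps


# Illustrative numbers: 20 denoising steps, CFG on the first 10.
ag_budget = nfes_adaptive_guidance(total_steps=20, cfg_steps=10)
# Plain CFG has to drop to this many steps to fit the same budget.
cfg_steps_same_budget = ag_budget // 2
print(ag_budget, cfg_steps_same_budget)
```

Under these assumptions, Adaptive Guidance still runs all 20 denoising iterations, whereas CFG at the same budget has to shorten the whole trajectory.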

Neural Architecture Search (NAS)

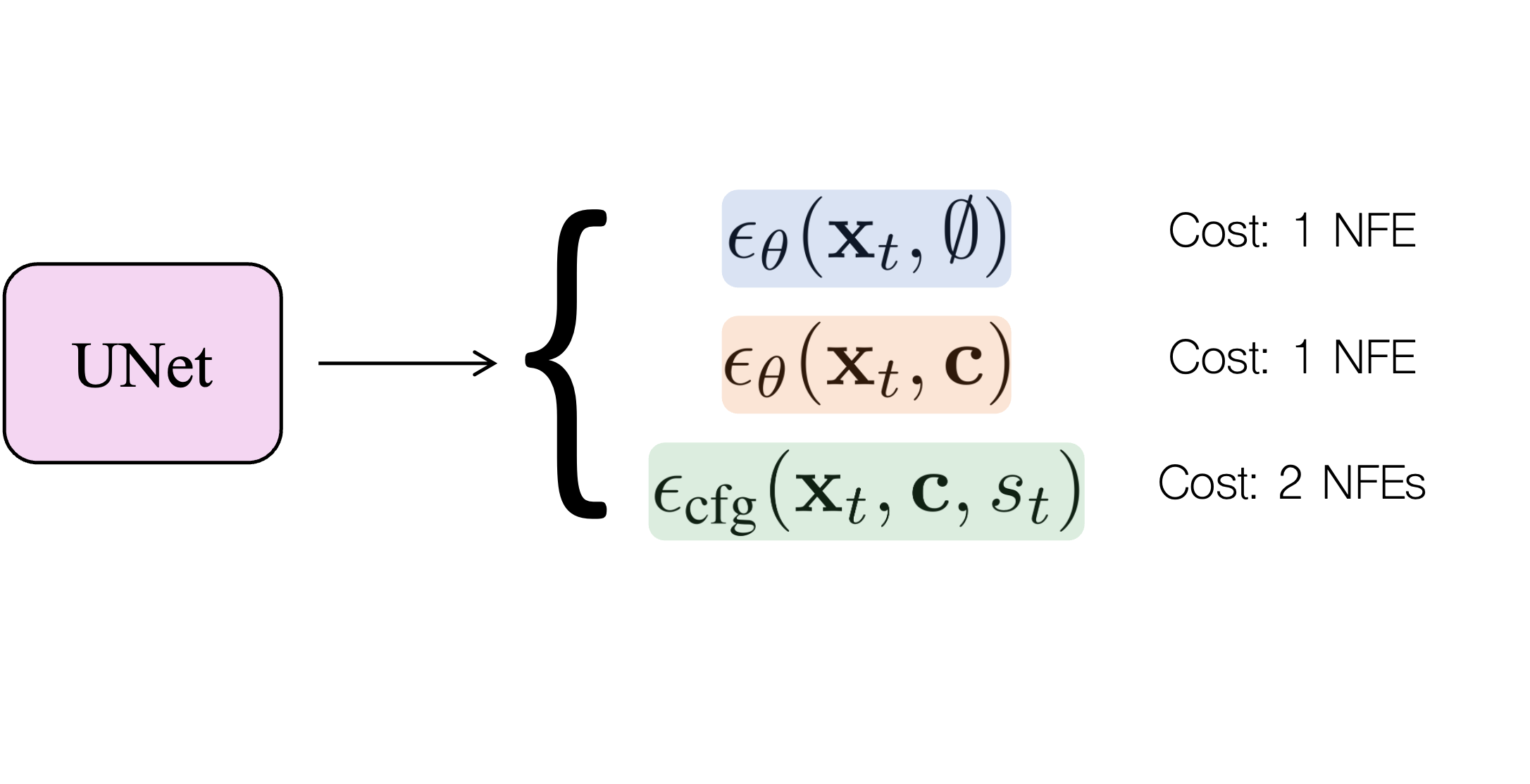

Search Space

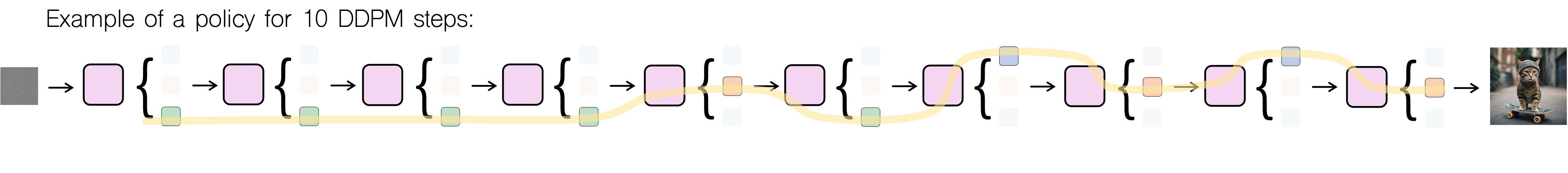

We employ techniques from differentiable Neural Architecture Search (NAS) to search for policies offering more desirable trade-offs between quality and Number of Function Evaluations (NFEs).
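One common way to make such a search differentiable is to relax the discrete per-step choice into a softmax over options, so that the expected cost can be traded off against a quality loss. The sketch below illustrates only that relaxation. The two-option search space, the costs, and all names are assumptions for illustration; the actual search space may contain more options.

```python
import numpy as np

# Hypothetical per-step options and their cost in network evaluations.
OPTION_NAMES = ["cfg", "cond"]
OPTION_COST = np.array([2.0, 1.0])  # [CFG, conditional-only]


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def expected_nfes(logits: np.ndarray) -> float:
    """Expected NFEs of a relaxed policy; `logits` has shape (steps, options)."""
    probs = softmax(logits)
    return float((probs * OPTION_COST).sum())


def discretize(logits: np.ndarray) -> list:
    """Pick the most likely option at every step to obtain a concrete policy."""
    return [OPTION_NAMES[i] for i in logits.argmax(axis=-1)]


logits = np.zeros((4, 2))  # uniform start: 1.5 expected NFEs per step
logits[2:, 1] = 5.0        # pretend the search learned to prefer 'cond' late
print(expected_nfes(logits), discretize(logits))
```

In a real search, the logits would be updated by gradient descent on a quality loss plus a penalty on the expected NFEs; that training loop is omitted here.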

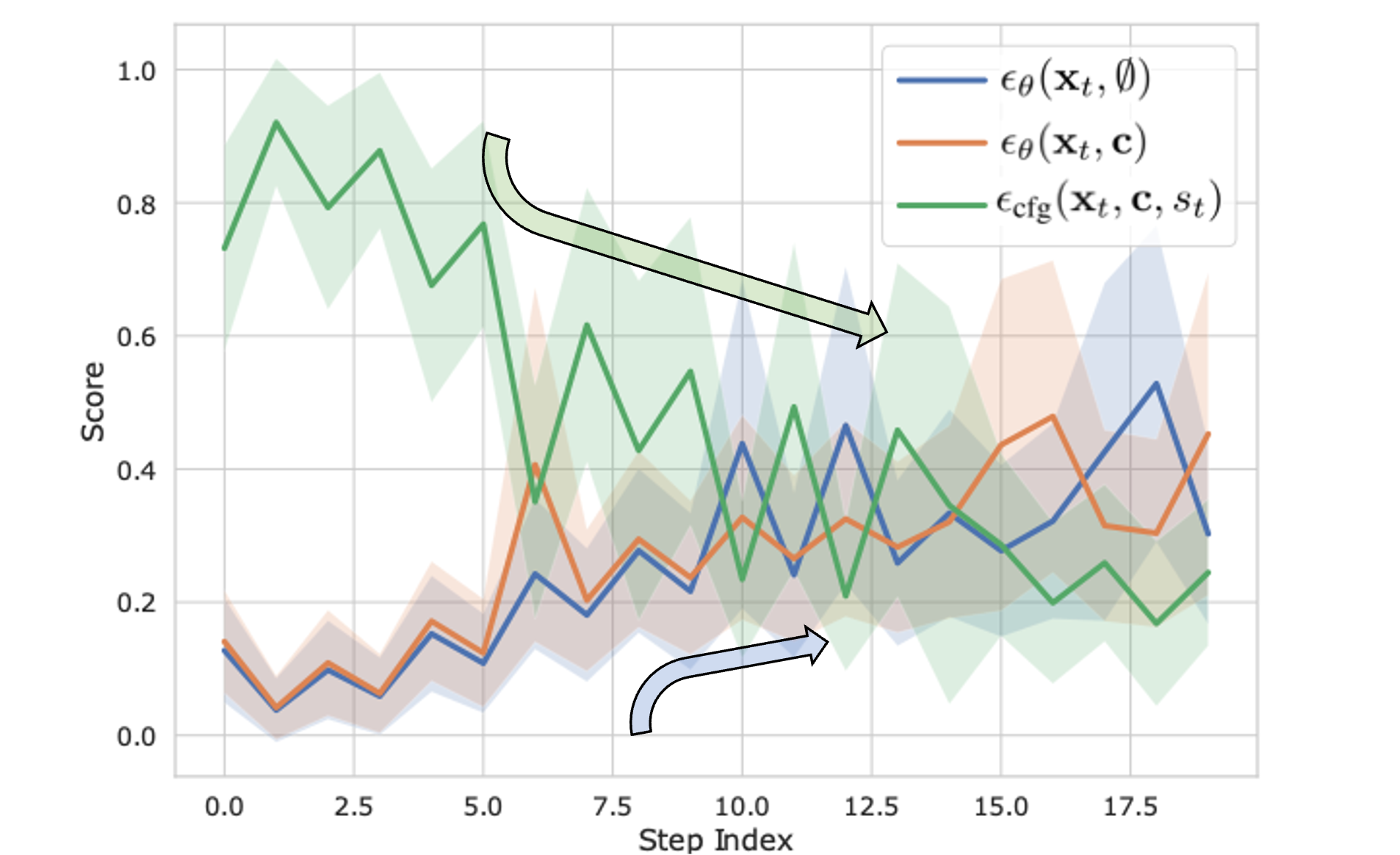

NAS Results

The search uncovers an interesting pattern: CFG steps are important in the first half of the diffusion process but later become redundant.

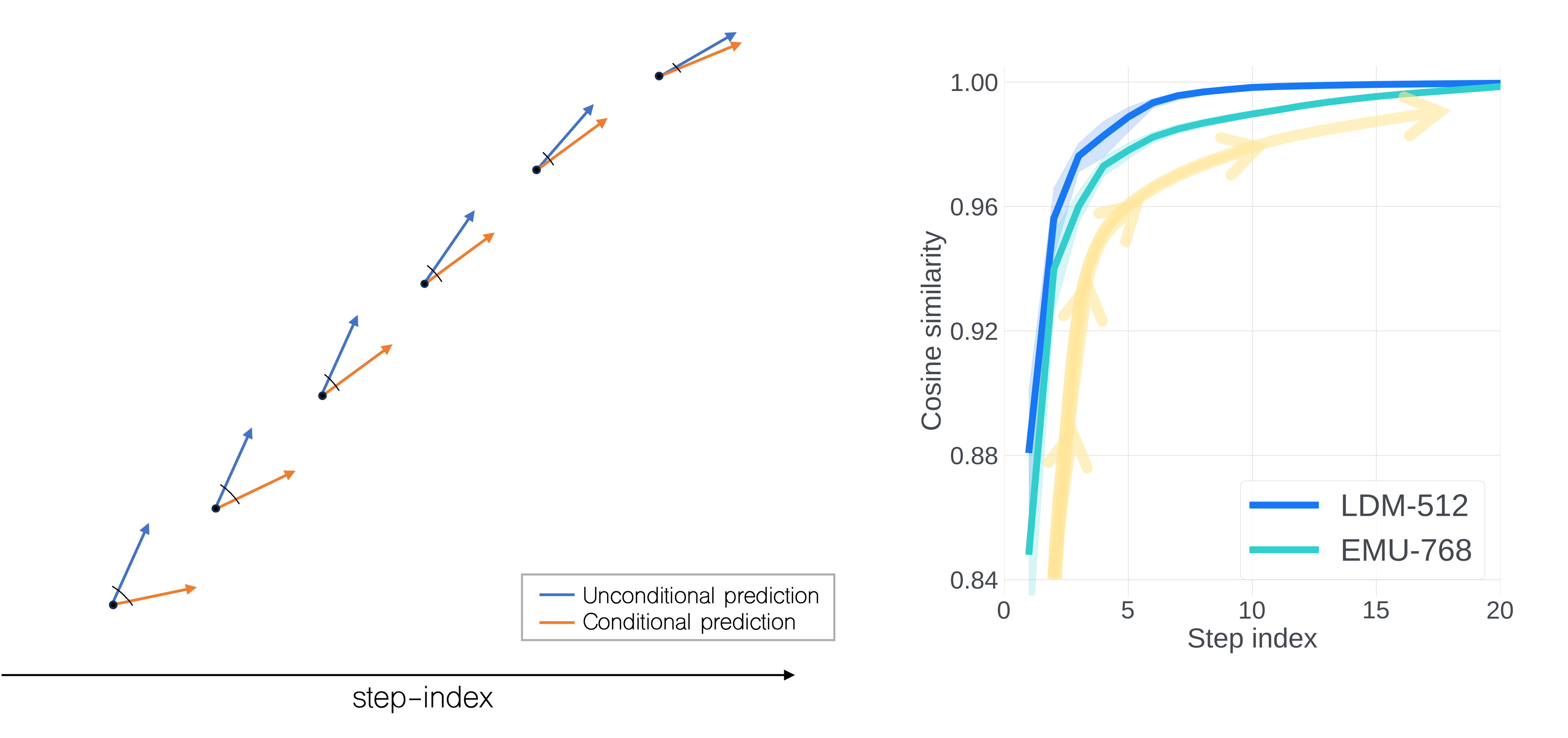

Cosine Similarity Analysis

We find that conditional and unconditional updates become increasingly correlated over time.
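This observation suggests a simple stopping rule: measure the cosine similarity between the two updates and drop the unconditional evaluation once they are nearly parallel. The following is a toy sketch of that idea, not the authors' implementation. `denoise` is a hypothetical callable, the update rule is a placeholder rather than a real scheduler, and the permanent switch after the first threshold crossing is an assumption.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two arrays, flattened to vectors."""
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def sample_adaptive(denoise, x, num_steps, guidance_scale, threshold):
    """Toy sampling loop that returns the final latent and the NFE count.

    `denoise(x, t, conditional)` is a hypothetical callable that returns
    the model's update for the current latent `x` at step `t`.
    """
    nfes = 0
    use_cfg = True
    for t in range(num_steps):
        eps_cond = denoise(x, t, conditional=True)
        nfes += 1
        if use_cfg:
            eps_uncond = denoise(x, t, conditional=False)
            nfes += 1
            eps = eps_uncond + guidance_scale * (eps_cond - eps_uncond)
            # Assumed rule: once the updates are nearly parallel, stop using CFG.
            if cosine_similarity(eps_cond, eps_uncond) > threshold:
                use_cfg = False
        else:
            eps = eps_cond
        x = x - eps / num_steps  # placeholder update, not a real scheduler
    return x, nfes
```

A lower threshold switches to conditional-only steps earlier and saves more evaluations; a threshold above 1 never switches and reproduces plain CFG.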

More Results

Negative Prompts

We also find that AG produces results similar to CFG when using non-empty negative prompts, again highlighting the importance of only the first T/2 diffusion steps for semantic structure.

Image Editing

We perform instruction-based editing with EMU Edit, which builds upon InstructPix2Pix. Classic CFG editing and our method produce results of equal quality, while our method reduces NFEs by 33.3%. Importantly, Guidance Distillation is not directly applicable to this task, as the update steps are conditioned on the input image.
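The 33.3% figure is consistent with simple arithmetic. InstructPix2Pix-style guidance combines three predictions per step (unconditional, image-conditioned, and image-plus-text-conditioned), so a guided step costs three evaluations. The sketch below assumes that guidance is used for half of the steps and that the remaining steps need a single evaluation. The function and parameter names are illustrative.

```python
import numpy as np


def ip2p_guided_update(eps_uncond, eps_image, eps_full, image_scale, text_scale):
    """Combine the three per-step predictions of InstructPix2Pix-style CFG."""
    return (eps_uncond
            + image_scale * (eps_image - eps_uncond)
            + text_scale * (eps_full - eps_image))


def nfe_saving(evals_guided: int, guided_fraction: float) -> float:
    """Relative NFE saving when only `guided_fraction` of the steps are guided
    and every remaining step uses a single evaluation."""
    mixed = guided_fraction * evals_guided + (1.0 - guided_fraction)
    return 1.0 - mixed / evals_guided


print(round(nfe_saving(evals_guided=3, guided_fraction=0.5), 3))  # 0.333
print(nfe_saving(evals_guided=2, guided_fraction=0.5))            # 0.25
```

Under the same assumption, the two-evaluation case of standard CFG gives a 25% saving.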